I started the year using Gemini Pro to take our integration patterns and convert them into a “playing card” style pattern library so that we can print and play! The content is mine; I used AI to generate the images, and a few issues with the image text remain. Oh well, maybe something to refine later in 2026 or next year.

Building on our last post: not every integration is real-time, and bulk/batch patterns bring an integration engineer closer to data/ETL engineers. These patterns cover bulk/delta movement and notification-style messaging that avoids rebuilding whole state, and they are aimed at integration engineers learning about data patterns.

Included patterns

- DSP-1 — Stream Processing to Materialized View

- DSP-2 — Backfill and Replay

- MSG-1 — Claim Check for Large Payloads

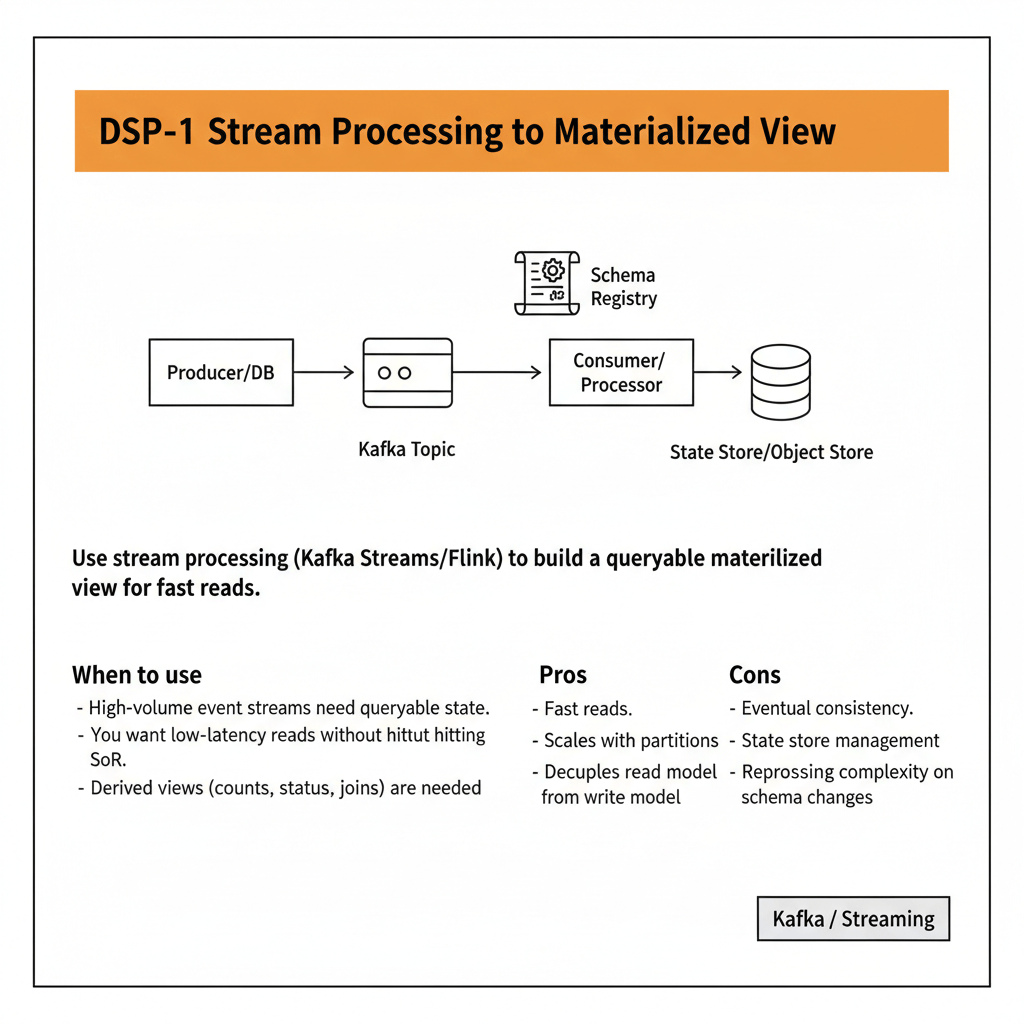

DSP-1: Stream Processing to Materialized View

Use stream processing (Kafka Streams/Flink) to build a queryable materialized view for fast reads.

When to use

- High-volume event streams need queryable state.

- You want low-latency reads without hitting the system of record (SoR).

- Derived views (counts, status, joins) are needed.

Pros

- Fast reads.

- Scales with partitions.

- Decouples read model from write model.

Cons

- Eventual consistency.

- State store management.

- Reprocessing complexity on schema changes.
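To make the card concrete, here is a minimal Python sketch of the idea. It is not Kafka Streams or Flink code: the `OrderEvent` type, the `OrderStatusView` class and the in-memory dictionaries are hypothetical stand-ins for the topic, the stream processor and the state store. It shows the processor folding each event into a derived view (latest status per order plus a count per status) that an API could read without touching the SoR.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderEvent:
    """Hypothetical event shape; a real topic would carry a schema-managed record."""
    order_id: str
    status: str  # e.g. "CREATED", "SHIPPED"


class OrderStatusView:
    """In-memory stand-in for the state store behind the materialized view."""

    def __init__(self):
        self.status_by_order = {}
        self.count_by_status = defaultdict(int)

    def apply(self, event):
        """Fold one event into the view (the stream processor's job)."""
        previous = self.status_by_order.get(event.order_id)
        if previous == event.status:
            return  # no state change, so the derived counts stay as they are
        if previous is not None:
            self.count_by_status[previous] -= 1
        self.status_by_order[event.order_id] = event.status
        self.count_by_status[event.status] += 1

    # Read side: what the API would query instead of the SoR.
    def get_status(self, order_id):
        return self.status_by_order.get(order_id)

    def count(self, status):
        return self.count_by_status.get(status, 0)


# A list stands in for the events consumed from the topic.
events = [
    OrderEvent("o-1", "CREATED"),
    OrderEvent("o-2", "CREATED"),
    OrderEvent("o-1", "SHIPPED"),
]

view = OrderStatusView()
for event in events:
    view.apply(event)

print(view.get_status("o-1"), view.count("CREATED"), view.count("SHIPPED"))
```

The read methods never recompute anything from the events, which is where the fast reads come from; the price is that the view only reflects events processed so far (the eventual consistency listed under Cons).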

PlantUML

@startuml

title Stream Processing to Materialized View

participant "Kafka Topic" as Kafka_Topic

participant "Stream Processor" as Stream_Processor

participant "State Store/DB" as State_Store_DB

participant "API" as API

Kafka_Topic -> Stream_Processor: Consume events

Stream_Processor -> State_Store_DB: Update materialized view

API -> State_Store_DB: Query view

API -> API: Return result

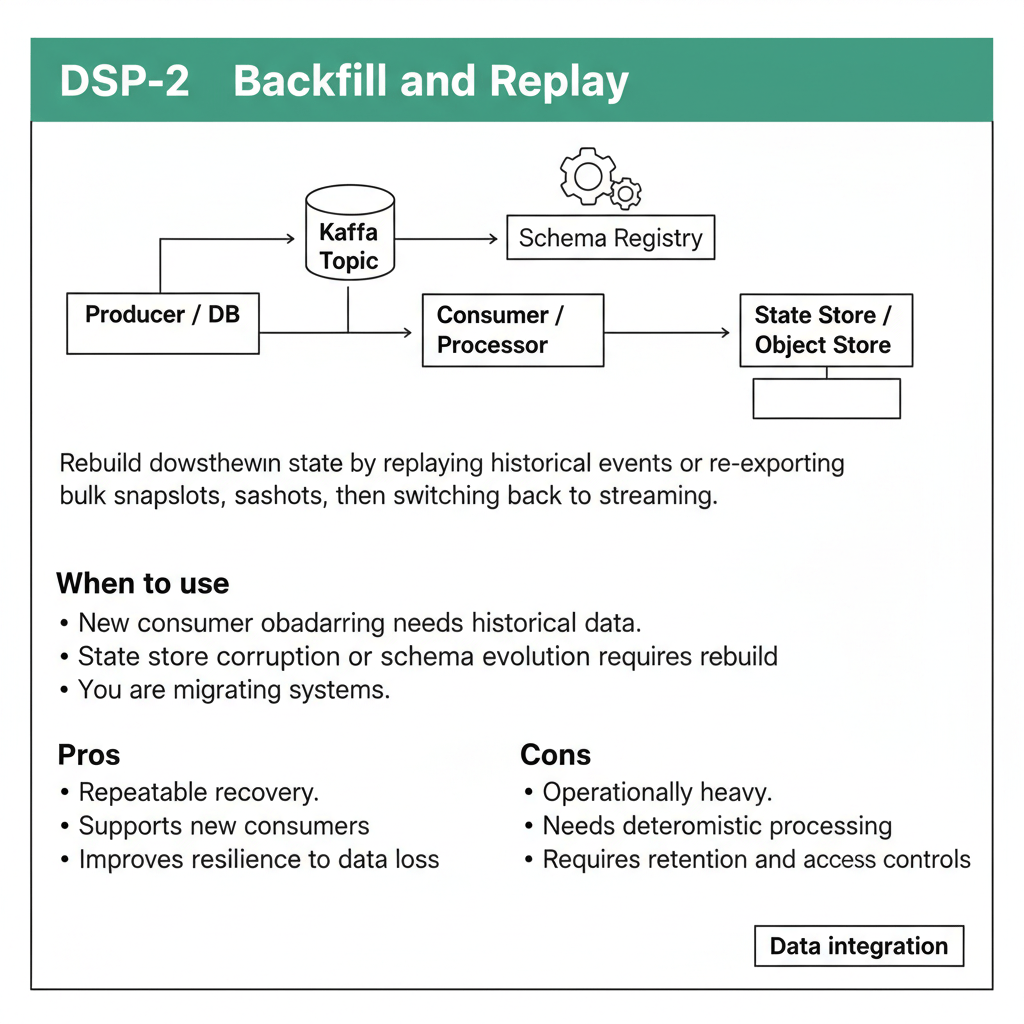

@endumlDSP-2: Backfill and Replay

Rebuild downstream state by replaying historical events or re-exporting bulk snapshots, then switching back to streaming.

When to use

- New consumer onboarding needs historical data.

- State store corruption or schema evolution requires rebuild.

- You are migrating systems.

Pros

- Repeatable recovery.

- Supports new consumers.

- Improves resilience to data loss.

Cons

- Operationally heavy.

- Needs deterministic processing.

- Requires retention and access controls.
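A minimal Python sketch of the seed-then-replay flow, under some loud assumptions: the `Snapshot` and `Consumer` classes are hypothetical, a plain list stands in for the Kafka topic (the list index plays the role of the offset), and events are simple `(key, value)` upserts. The important detail is that the snapshot records the last offset it includes, so the consumer knows where replay has to start.

```python
from dataclasses import dataclass, field


@dataclass
class Snapshot:
    """Hypothetical bulk export: the state plus the last log offset it covers."""
    state: dict
    as_of_offset: int


@dataclass
class Consumer:
    state: dict = field(default_factory=dict)
    next_offset: int = 0  # first offset this consumer has not yet applied

    def seed(self, snapshot):
        """Load the bulk snapshot, then position just after it in the log."""
        self.state = dict(snapshot.state)
        self.next_offset = snapshot.as_of_offset + 1

    def apply(self, offset, event):
        if offset < self.next_offset:
            return  # already covered by the snapshot or an earlier replay
        key, value = event
        self.state[key] = value  # deterministic upsert
        self.next_offset = offset + 1

    def catch_up(self, log):
        """Replay everything from next_offset; the same loop serves live consumption."""
        for offset in range(self.next_offset, len(log)):
            self.apply(offset, log[offset])


# A list stands in for the topic; the index is the offset.
log = [("a", 1), ("b", 2), ("a", 3), ("c", 4)]
snapshot = Snapshot(state={"a": 1, "b": 2}, as_of_offset=1)

consumer = Consumer()
consumer.seed(snapshot)    # 1. load snapshot (seed)
consumer.catch_up(log)     # 2. replay events from the snapshot point
log.append(("b", 5))       # 3. a new live event arrives
consumer.catch_up(log)     #    the consumer keeps going from where it stopped

print(consumer.state, consumer.next_offset)
```

Because `apply` is deterministic, a second consumer that replays the whole log from offset 0 ends up with the same state as the seeded one, which is what makes the recovery repeatable.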

PlantUML

@startuml

title Backfill and Replay

actor "Ops" as Ops

participant "Bulk Snapshot Export" as Bulk_Snapshot_Export

participant "Kafka Topic" as Kafka_Topic

participant "Consumer" as Consumer

Ops -> Bulk_Snapshot_Export: Generate snapshot

Bulk_Snapshot_Export -> Consumer: Load snapshot (seed)

Kafka_Topic -> Consumer: Replay events from point-in-time

Consumer -> Consumer: Switch to live consumption

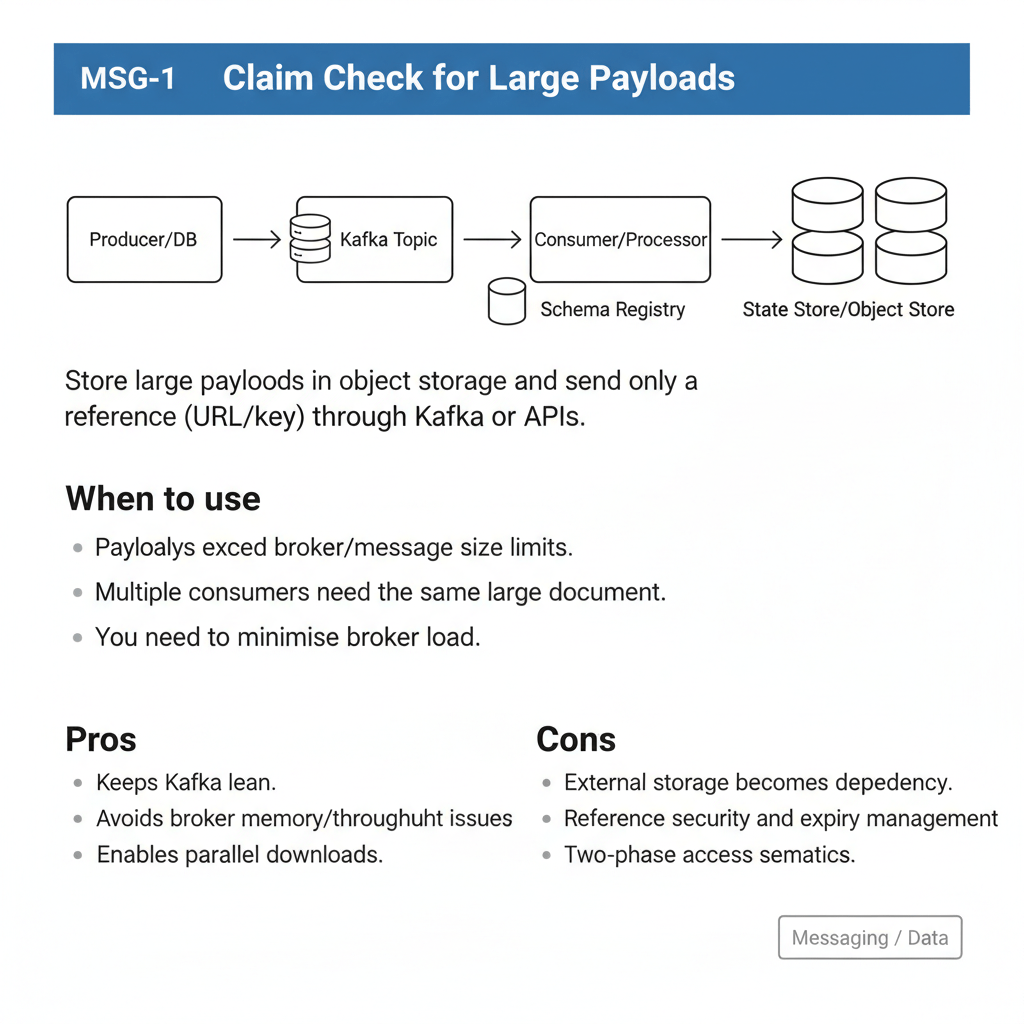

@endumlMSG-1: Claim Check for Large Payloads

Store large payloads in object storage and send only a reference (URL/key) through Kafka or APIs.

When to use

- Payloads exceed broker/message size limits.

- Multiple consumers need the same large document.

- You need to minimise broker load.

Pros

- Keeps Kafka lean.

- Avoids broker memory/throughput issues.

- Enables parallel downloads.

Cons

- External storage becomes a dependency.

- Reference security and expiry management.

- Two-phase access semantics.
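As a rough Python sketch of the claim check flow, assuming a hypothetical `InMemoryObjectStore` in place of a real object store and a list in place of the Kafka topic (the event field names `objectKey`, `sizeBytes` and `contentType` are also just illustrative): the producer stores the payload first and then publishes only a small reference event.

```python
import json
import uuid


class InMemoryObjectStore:
    """Hypothetical stand-in for an object store such as a bucket."""

    def __init__(self):
        self._objects = {}

    def put(self, payload):
        key = f"payloads/{uuid.uuid4()}"
        self._objects[key] = payload
        return key

    def get(self, key):
        return self._objects[key]


def publish_with_claim_check(store, topic, payload, content_type):
    """Store the payload first, then publish only the reference."""
    object_key = store.put(payload)
    event = {
        "objectKey": object_key,
        "sizeBytes": len(payload),
        "contentType": content_type,
    }
    topic.append(json.dumps(event).encode("utf-8"))
    return object_key


def consume(store, message):
    """Second phase of access: resolve the reference to fetch the payload."""
    event = json.loads(message)
    return store.get(event["objectKey"])


store = InMemoryObjectStore()
topic = []  # a list stands in for the Kafka topic

large_payload = b"x" * 5_000_000
publish_with_claim_check(store, topic, large_payload, "application/octet-stream")
received = consume(store, topic[0])

print(len(topic[0]), len(received))
```

The message on the topic stays tiny however large the document is. The ordering matters: writing to the store before publishing means a consumer never receives a key that does not exist yet. Expiry and access control on the key are left out here, and they are exactly the "reference security and expiry management" cost on the card.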

PlantUML

@startuml

title Claim Check for Large Payloads

participant "Producer" as Producer

participant "Object Store" as Object_Store

participant "Kafka Topic" as Kafka_Topic

participant "Consumer" as Consumer

Producer -> Object_Store: PUT large payload -> objectKey

Producer -> Kafka_Topic: Publish event with objectKey

Kafka_Topic -> Consumer: Deliver event

Consumer -> Object_Store: GET objectKey

@enduml

- KAF-1 — Transactional Outbox + CDC

- KAF-2 — Idempotent Consumer

- KAF-3 — Retry with Backoff + Dead Letter Queue

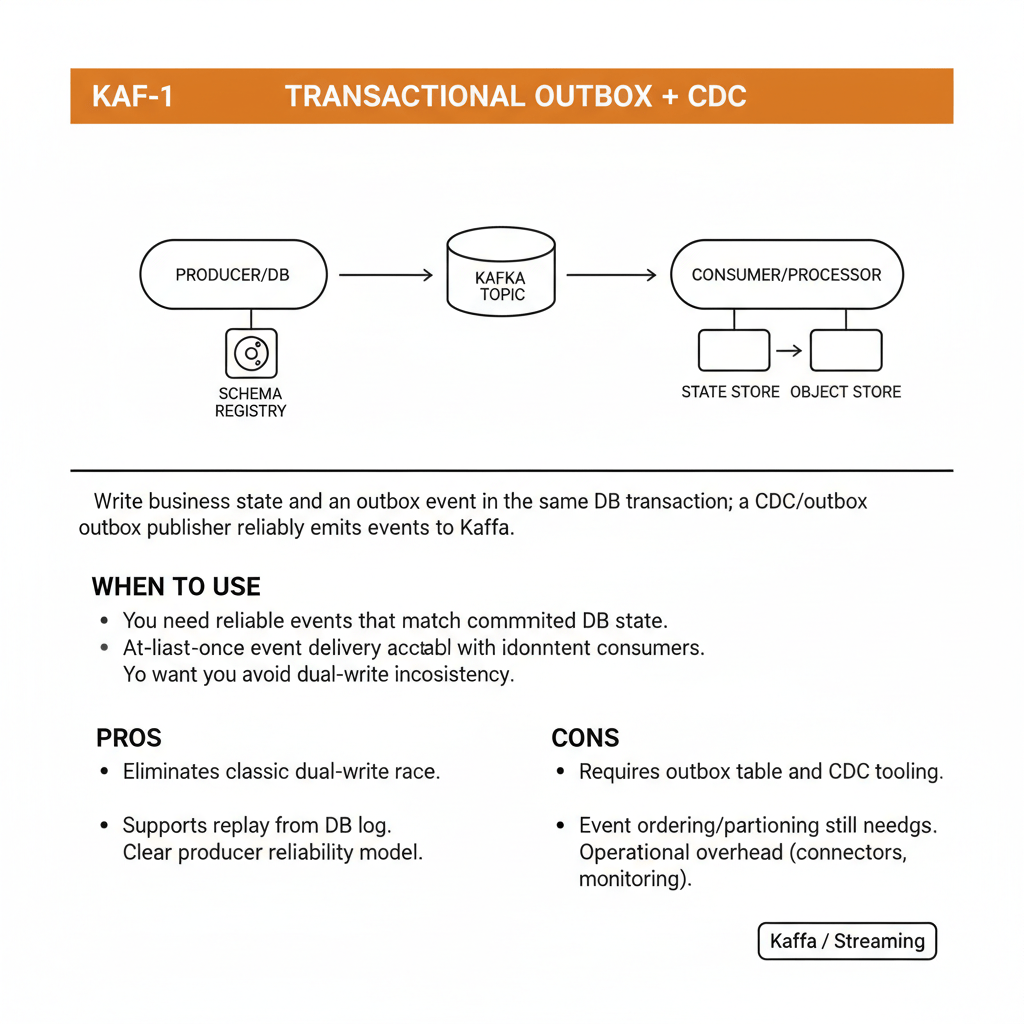

KAF-1: Transactional Outbox + CDC

Write business state and an outbox event in the same DB transaction; a CDC/outbox publisher reliably emits events to Kafka.

When to use

- You need reliable events that match committed DB state.

- At-least-once event delivery is acceptable with idempotent consumers.

- You want to avoid dual-write inconsistency.

Pros

- Eliminates classic dual-write race.

- Supports replay from DB log.

- Clear producer reliability model.

Cons

- Requires outbox table and CDC tooling.

- Event ordering/partitioning still needs design.

- Operational overhead (connectors, monitoring).
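Here is a small Python sketch of the outbox half of the pattern using SQLite from the standard library. The table layout, the `place_order` and `publish_pending` helpers and the `published` flag are all assumptions for illustration; in particular, the polling publisher below is a simple stand-in for real CDC tooling, which would normally tail the database log rather than query the table.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT NOT NULL);
    CREATE TABLE outbox (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        aggregate_id TEXT NOT NULL,
        event_type TEXT NOT NULL,
        payload TEXT NOT NULL,
        published INTEGER NOT NULL DEFAULT 0
    );
    """
)


def place_order(conn, order_id):
    """Write business state and the outbox event in ONE transaction."""
    with conn:  # commits both inserts, or rolls both back on an exception
        conn.execute(
            "INSERT INTO orders (id, status) VALUES (?, ?)",
            (order_id, "PLACED"),
        )
        conn.execute(
            "INSERT INTO outbox (aggregate_id, event_type, payload) VALUES (?, ?, ?)",
            (order_id, "OrderPlaced", json.dumps({"orderId": order_id})),
        )


def publish_pending(conn, produce):
    """Polling publisher: emit unpublished rows in insertion order, then mark them."""
    rows = conn.execute(
        "SELECT id, aggregate_id, event_type, payload "
        "FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, aggregate_id, event_type, payload in rows:
        produce(aggregate_id, event_type, payload)  # e.g. a Kafka producer send
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)


sent = []  # a list stands in for the Kafka topic
place_order(conn, "o-1")
published_count = publish_pending(conn, lambda key, etype, body: sent.append((key, etype, body)))

print(published_count, sent)
```

If the publisher crashes after `produce` but before the row is marked, the event is emitted again on the next run. That is the at-least-once behaviour the card mentions, and it is why this pattern is usually paired with an idempotent consumer.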

PlantUML

@startuml

title Transactional Outbox + CDC

participant "Service" as Service

participant "DB" as DB

participant "CDC/Outbox Publisher" as CDC_Outbox_Publisher

participant "Kafka Topic" as Kafka_Topic

participant "Consumers" as Consumers

Service -> DB: TX: update state + insert OutboxEvent

CDC_Outbox_Publisher -> DB: Read new outbox rows / log

CDC_Outbox_Publisher -> Kafka_Topic: Produce event

Kafka_Topic -> Consumers: Deliver event

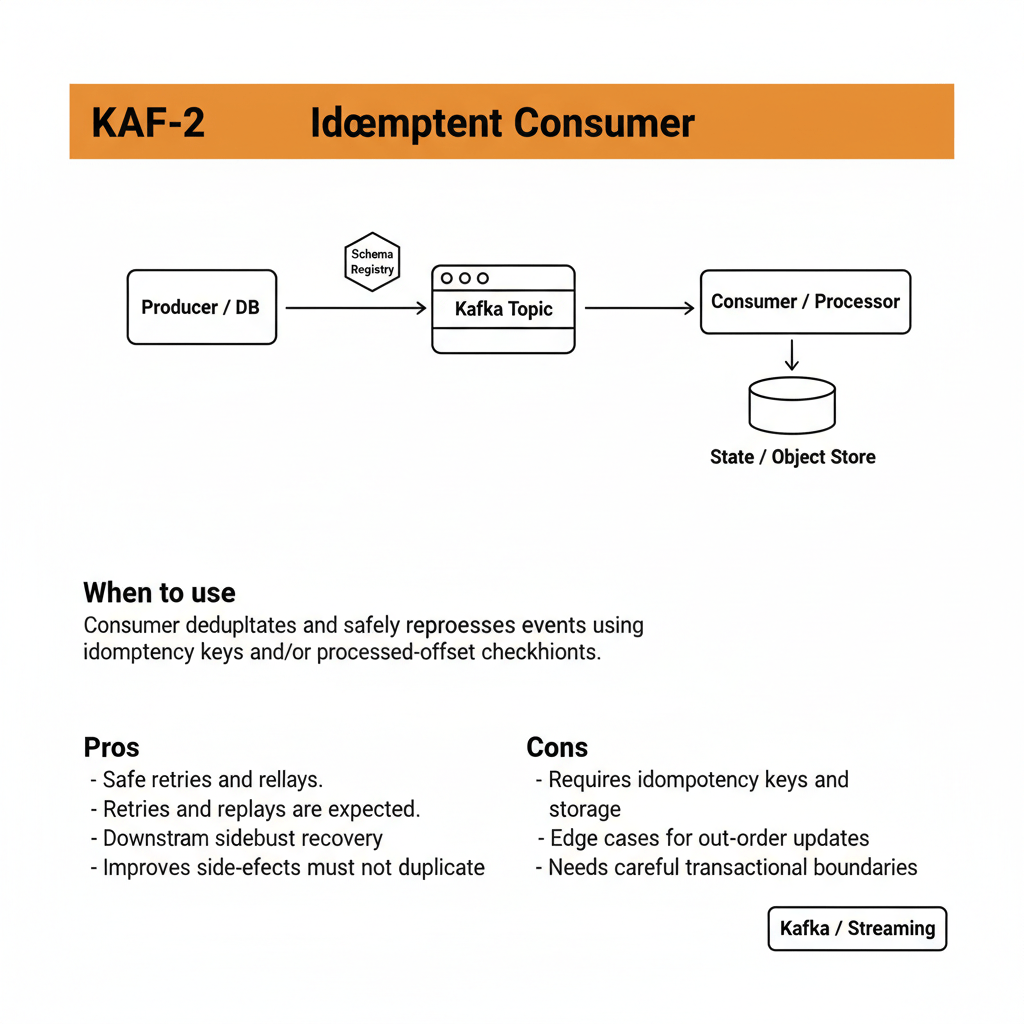

@endumlKAF-2: Idempotent Consumer

Consumer deduplicates and safely reprocesses events using idempotency keys and/or processed-offset checkpoints.

When to use

- At-least-once delivery is used (default for Kafka consumers).

- Retries and replays are expected.

- Downstream side-effects must not duplicate.

Pros

- Safe retries and replays.

- Enables robust recovery.

- Improves correctness under failures.

Cons

- Requires idempotency keys and storage.

- Edge cases for out-of-order updates.

- Needs careful transactional boundaries.
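A minimal Python sketch of the deduplication step, with hypothetical names throughout: the `Event` shape, the `IdempotentConsumer` class, a `set` standing in for the idempotency store and a dictionary of balances standing in for the downstream system. In a real consumer the side-effect and the recording of the ID would need to share a transaction (or the side-effect itself would need to be naturally idempotent); here both are in memory, so that boundary is only marked in a comment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical event carrying a producer-assigned idempotency ID."""
    key: str
    idempotency_id: str
    amount: int


class IdempotentConsumer:
    def __init__(self):
        self.processed_ids = set()  # stand-in for a durable idempotency store
        self.balances = {}          # stand-in for the downstream system

    def handle(self, event):
        """Apply the side-effect once per idempotency ID; return True if applied."""
        if event.idempotency_id in self.processed_ids:
            return False  # duplicate delivery: skip, but still safe to commit the offset
        # In a real system the next two steps belong in one transaction.
        self.balances[event.key] = self.balances.get(event.key, 0) + event.amount
        self.processed_ids.add(event.idempotency_id)
        return True


consumer = IdempotentConsumer()
deliveries = [
    Event("acct-1", "evt-001", 50),
    Event("acct-1", "evt-002", 25),
    Event("acct-1", "evt-001", 50),  # redelivered after a retry or replay
]
results = [consumer.handle(event) for event in deliveries]

print(results, consumer.balances)
```

The redelivered `evt-001` is recognised and skipped, so the balance reflects each event exactly once even though delivery was at-least-once.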

PlantUML

@startuml

title Idempotent Consumer

participant "Kafka Topic" as Kafka_Topic

participant "Consumer" as Consumer

participant "Idempotency Store" as Idempotency_Store

participant "Downstream System" as Downstream_System

Kafka_Topic -> Consumer: Event(key, idempotencyId)

Consumer -> Idempotency_Store: Check/record idempotencyId

Consumer -> Downstream_System: Apply side-effect

Consumer -> Kafka_Topic: Commit offset

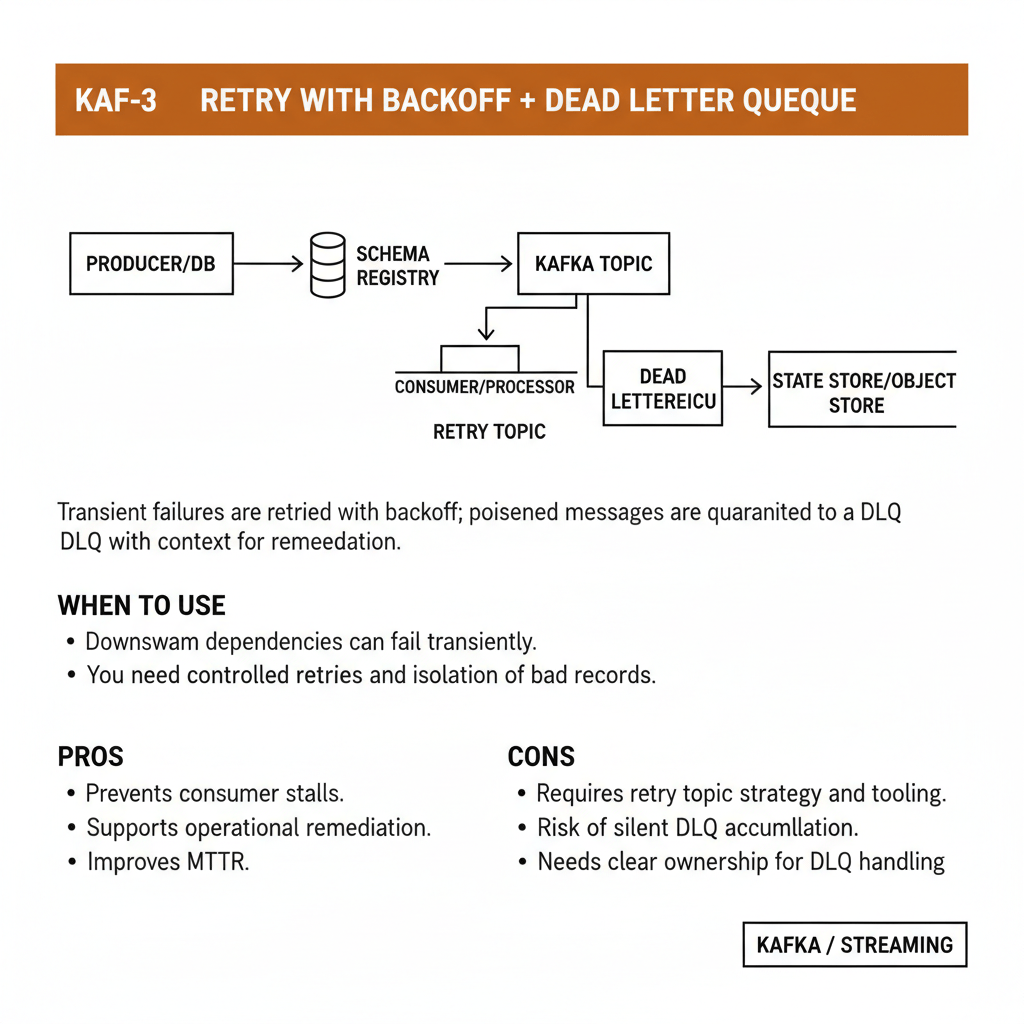

@endumlKAF-3: Retry with Backoff + Dead Letter Queue

Transient failures are retried with backoff; poisoned messages are quarantined to a DLQ with context for remediation.

When to use

- Downstream dependencies can fail transiently.

- You need controlled retries and isolation of bad records.

Pros

- Prevents consumer stalls.

- Supports operational remediation.

- Improves MTTR.

Cons

- Requires retry topic strategy and tooling.

- Risk of silent DLQ accumulation.

- Needs clear ownership for DLQ handling.
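The routing decision on this card can be sketched in a few lines of Python. Everything here is an illustrative assumption: the `Envelope` wrapper with its attempt counter, the `TransientError` type, the exponential `backoff_seconds` policy, the limit of three attempts, and the lists standing in for the retry topic and the DLQ. Nothing actually sleeps; the delay is just recorded alongside the message, as a delayed retry topic would do.

```python
from dataclasses import dataclass


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying."""


@dataclass
class Envelope:
    payload: str
    attempt: int = 0  # number of failed attempts so far


def backoff_seconds(attempt, base=1.0, cap=60.0):
    """Exponential backoff: base * 2^attempt, capped."""
    return min(cap, base * (2 ** attempt))


def process_with_retry(envelope, handler, retry_topic, dlq, max_attempts=3):
    """Route a message to success, delayed retry, or the DLQ."""
    try:
        handler(envelope.payload)
        return "ok"
    except TransientError as exc:
        failed_attempts = envelope.attempt + 1
        if failed_attempts < max_attempts:
            delay = backoff_seconds(envelope.attempt)
            retry_topic.append((delay, Envelope(envelope.payload, failed_attempts)))
            return "retry"
        error, attempts = str(exc), failed_attempts
    except Exception as exc:
        # Non-transient failure (poisoned message): retrying will not help.
        error, attempts = repr(exc), envelope.attempt + 1
    # Quarantine with context so someone can investigate and reprocess.
    dlq.append({"payload": envelope.payload, "error": error, "attempts": attempts})
    return "dlq"


def always_down(payload):
    raise TransientError("downstream unavailable")


retry_topic, dlq, outcomes = [], [], []
envelope = Envelope("order-42")
while True:
    outcome = process_with_retry(envelope, always_down, retry_topic, dlq)
    outcomes.append(outcome)
    if outcome != "retry":
        break
    _, envelope = retry_topic[-1]  # pretend the delayed message was redelivered

print(outcomes, [delay for delay, _ in retry_topic], dlq)
```

The main consumer never blocks on a failing message: it either hands the message to the retry path with a growing delay or quarantines it. What the sketch cannot show is the operational side, such as alerting on DLQ depth and someone owning the remediation.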

PlantUML

@startuml

title Retry with Backoff + Dead Letter Queue

participant "Consumer" as Consumer

participant "Retry Topic(s)" as Retry_Topic_s_

participant "DLQ" as DLQ

participant "Ops/Remediation" as Ops_Remediation

Consumer -> Retry_Topic_s_: Publish for delayed retry

Consumer -> DLQ: Publish poisoned message + error

Ops_Remediation -> DLQ: Investigate and reprocess/fix

@enduml

Summary

More data patterns are to come. This is an evolving post, and I will look to fix the images! I am reading the Data Intensive Patterns book and working with some amazing data engineers to bring key patterns to life.